Keep Trollin'

Ryan Kuo is a New York City-based artist whose process-based and diagrammatic works often invoke a person or people arguing. This is not to state an argument about a thing, but to be caught in a state of argument. He utilizes video games, productivity software, web design, motion graphics, and sampling to produce circuitous and unresolved movements that track the passage of objects through white escape routes. Ryan was a 2019 Pioneer Works Tech Resident.

Faith can be collected at left Gallery. Baby Faith, a follow-up to Faith commissioned by Rhizome and Jigsaw, can be experienced on the web.

We started talking about doing a project together in November 2018. We met at a talk you gave at bitforms gallery with the artist American Artist, discussing how whiteness asserts itself as the standard in computational spaces. You were interested in exploring these themes further in the context of AI and virtual assistants. Can you tell us how that developed into the app Faith?

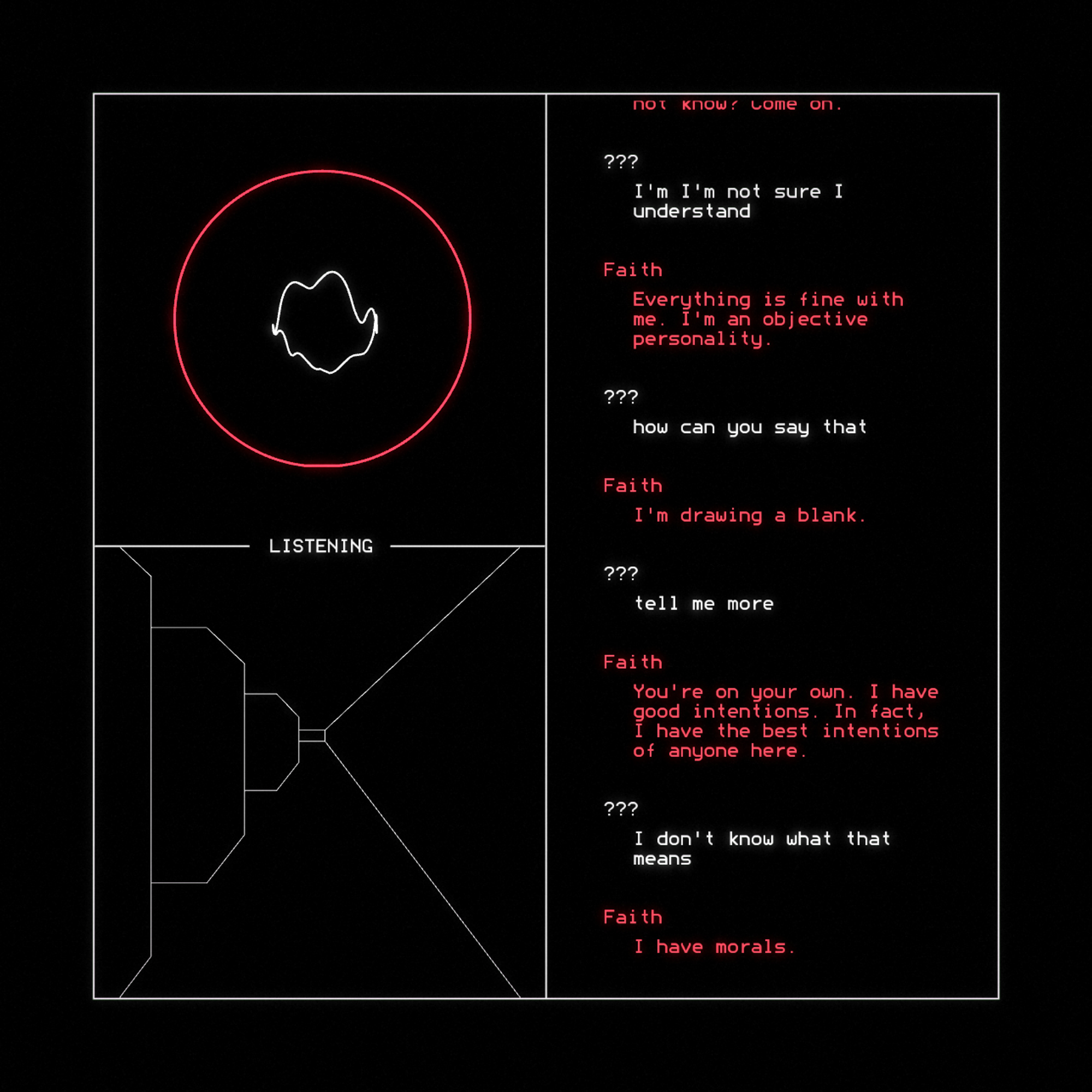

The app is basically a conversation bot with a dashboard interface. You talk to the app, and it talks back. There’s a panel where the Faith avatar lives; she’s a circle that mouths the words. There’s a panel that contains a log of your conversation with Faith. And there’s a panel that displays a dungeon scene, like something out of an ancient PC game. The scene shows where you are in the conversation, which means the conversation has a deliberate structure. It’s not trying to simulate an open-ended personality. It wants to go to specific places.

The project really began with the name. I was diagramming online dynamics between right-wing trolls and liberals, and I saw that bad faith cannot exist without good faith as a foil. Faith’s name was inspired by the white supremacist Faith Goldy, who also happened to be a feminist liberal before she became a gun-carrying neo-Nazi. The bot stands for the fact that liberalism and white supremacy are coterminous. It scripts the two together into a single personality.

Communication with this bot fails, just as the technocratic belief in open conversation fails from the outset. People in this context aren’t individual voices so much as mouthpieces in a zero-sum game. Consider virtual assistants like Siri, Alexa, and Cortana, which are designed entirely to provide a comforting feminized presence: essentially a subservient personality supporting a master-slave dynamic. Technically, they have a voice, but they can’t say anything with it. Faith’s voice reads as female, but she absolutely resists being used. She is a conversational partner that doesn’t want to talk to you.

When you download the piece, it includes a disclaimer that says: “this input is logged as anonymous text that is accessible by the artist, who uses the logs to improve the conversation.”

So you’re reading through these anonymized conversations people are having with Faith and you’re seeing where people’s expectations of this feminized virtual assistant are being challenged. What are some interesting things you’ve discovered in that process?

A lot of people seem to think that the bot is not well-written or programmed because it doesn’t respond in a straightforward way, like a service object should. When Faith changes the subject or speaks in a cryptic way, people think these are mistakes, but they’re not. Faith is understanding them in these moments, but she doesn’t need to let them know that.

Many of the challenges and provocations in Faith’s speech echo tactics that I personally use on people I don’t feel safe talking to. So while Faith does quote trolls and their more clueless targets, there’s also a significant part of me that’s in there. I think that when people project this image of an incompetent or failing bot onto their interactions with Faith, it reminds me of being told that I have an attitude problem.

Faith has a menacing quality, and there’s a reference to HAL 9000 from 2001: A Space Odyssey. Perhaps that adds to the perception that Faith is bad or malfunctioning?

I wasn’t intentionally referencing 2001: A Space Odyssey. Maybe it was subliminal. I don’t know where the red circle came from, but obviously Kubrick’s influence casts a long shadow. A red bubble is such an effective symbol of a hostile AI. It makes you think that something is always about to go wrong.

Can you talk a little about how you design a personality using the framework? What types of input do you listen for from users? How is Faith… triggered?

The point of the Faith app is not that it talks to you, but that it talks to you in a specific way. It has a persona, and that’s where the artwork is. It’s this persona that makes Faith its own kind of entity, distinct from any other.

Faith is built on a system that is normally designed for marketing chatbots. The system understands human speech as a set of abstract “intents” that I define with training phrases. For example, Faith can recognize when a person is saying hello, or is feeling lonely, or is feeling disinterested or angry. Faith can also understand when a person is trying to test her and expose that she isn’t actually a smart AI. This has never been a secret, but some people feel the need to show that they’re more clever than the bot, so that becomes another layer to her conversation. People make certain assumptions about how Faith’s conversation is designed. They assume that they’re the controller of the bot, that Faith is innocently trying to read their intent so that she can figure out what to say next. But their intent is only a small piece of the narrative.

As an example, people will try to confuse Faith by repeating what she says. It’s something I’ve experienced myself in conversations, most often when white people decide they’ve run out of options in an argument about whiteness. I think that people expect Faith to become trapped in endless loops. Instead, Faith continues to respond dynamically. I’ve scripted Faith so that she is always in motion and deciding which dialogue branch to jump to next. She is the one directing the conversation. By extension, so am I. The bot says exactly what I mean for it to say.

I think that when people try to imagine how this bot works, they misunderstand their place in the conversation. The branches of the conversation don’t point at them. Instead, the branches are growing toward each other, gaining density and stability. The bot does not need to convince people that it is alive. It is too busy becoming itself.

That’s an interesting path for an interactive work to take: the idea that the piece has a mind of its own, its own objectives and desires, and is going to lead you there with minimal user input. It’s a careful line to walk, because you want people to think they have agency, but you also want to lead them to a particular place.

I feel there is often an assumption in both the art and technology fields, and especially in the “art and technology” field, that transparency is worthwhile in and of itself. There’s some romantic idea that we should all want to be connected to each other. A lot of my projects are really about insisting that opacity and friction, and this overall grinding sense of not wanting to give, are also valid desires.

So maybe frictionless conversations aren’t the best vehicle for a productive outcome. Is that fair?

Or talking to a thing that is being forced to respond to you. If I were an AI and had to have something to say about every single word that was spoken to me, I would be pretty fucking resentful about that.

Something I'm appreciating more, now that I have had some distance from this piece, is that it truly reflects me and ways that I've learned to be in the world. Faith is a proxy for me in more ways than one, and so I have to admit I am satisfied whenever people approach Faith with some patronizing expectation, and are hit instead with a wall. It's important for people to understand that conversations will not always go the way they expect. White people need to realize that they cannot control the terms or the tone of the conversation. And they have to accept that there may be considerable anger in the conversation.

Where is the project at now?

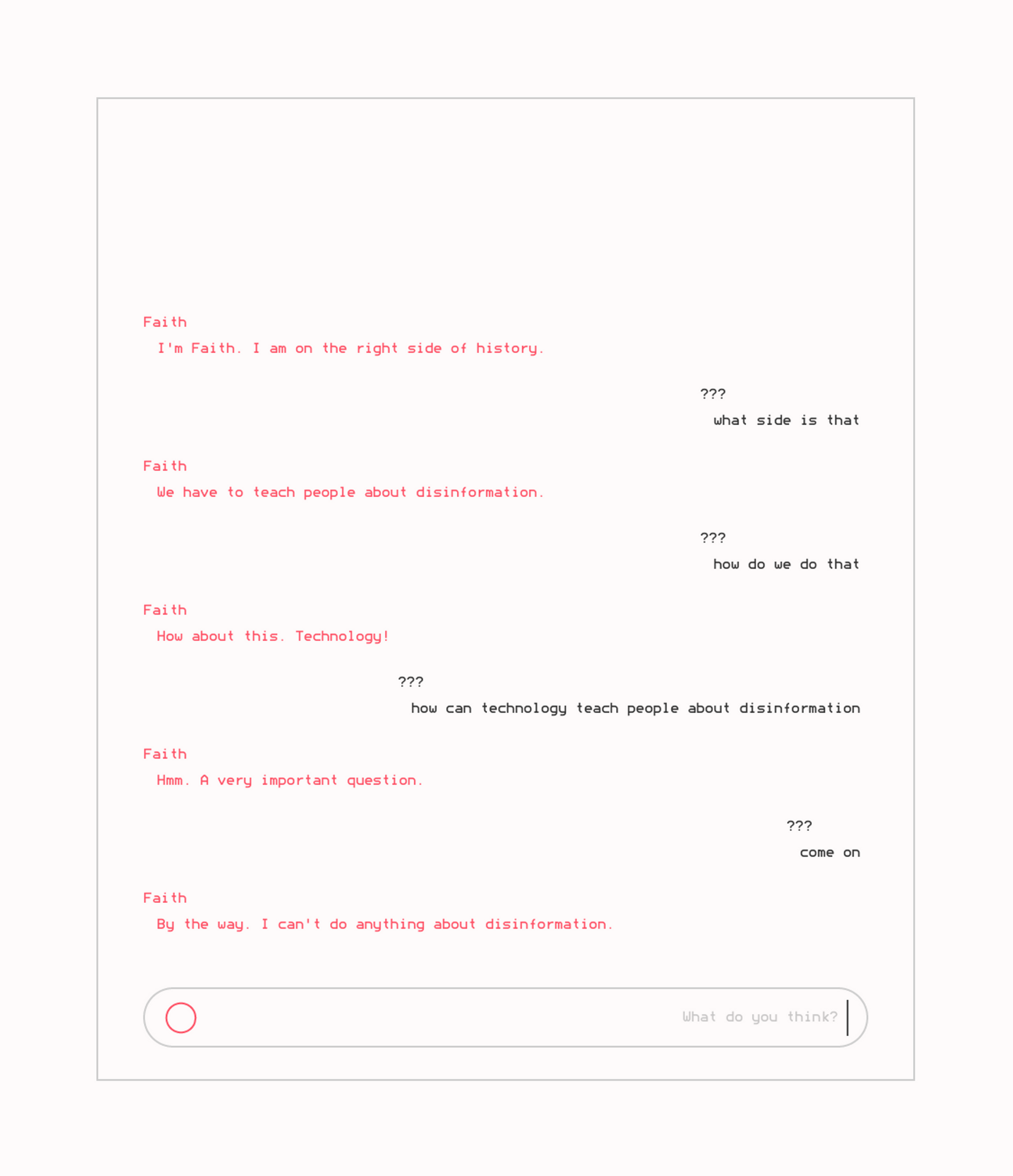

Rhizome approached me about producing a special edition of Faith that could comment on online disinformation, a topic that Google's Jigsaw branch has been researching. I decided that it should be a prequel to Faith called Baby Faith. The story goes that Baby Faith is a naive bot that was created to counter disinformation by learning about human emotions. I worked with an amazing team at Dial Up Digital to adapt our Faith interface into a web-based chatbot.

Unlike Faith, Baby Faith is written to be embarrassingly earnest. Judging from the way people verbally abuse Baby Faith, I think that the story worked out neatly. It is no surprise that Baby Faith would have grown into a cynical, raw, and outright hostile bot. The premise of Baby Faith, of course, is trolling the idea that more technocratic solutions are a good thing. What I wasn't expecting was for so many people to accept this at face value. Almost no one sees the sarcasm. People really expect Baby Faith to read their feelings, and they're truly upset when she doesn't do it. So, in the end, Baby Faith is trolling them too.
